Oracle Listener Log Explained (listener.log)


Listener log overview

In an Oracle database, the listener log file (listener.log) keeps growing unless it is truncated.

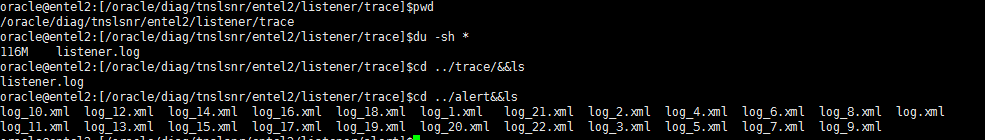

Listener log location

For Oracle 9i/10g

It is located at:

`$ORACLE_HOME/network/log/<listener_name>.log` (listener.log for the default listener LISTENER)

For Oracle 11g/12c

It is located at:

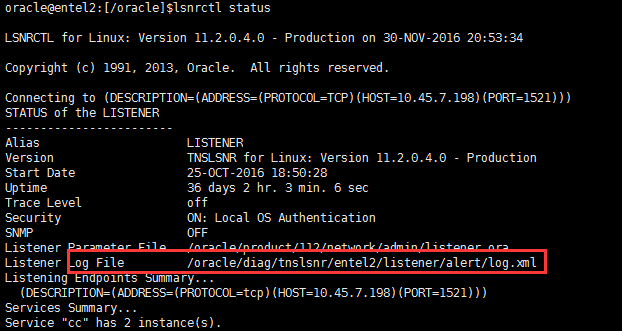

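`$ORACLE_BASE/diag/tnslsnr/<hostname>/listener/trace/listener.log`

You can also check the location with lsnrctl status.

The screenshot shows the XML-format log (log.xml). Its content is the same as the plain-text .log file.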
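The default locations above can be collected into a small helper. This is a sketch only. The function name `listener_log_path` is hypothetical. It assumes listener.ora has no `LOG_DIRECTORY_<listener>` or `ADR_BASE_<listener>` override, and that file and directory names use the lowercase listener name. `lsnrctl status` remains the authoritative check. Pass the host explicitly if `hostname` on your system does not return the name used under `diag/tnslsnr`.

```shell
#!/bin/bash
# Hypothetical helper: print the expected default listener log path.
#   9i/10g : $ORACLE_HOME/network/log/<listener>.log
#   11g+   : $ORACLE_BASE/diag/tnslsnr/<host>/<listener>/trace/<listener>.log
# Usage: listener_log_path MAJOR_VERSION [LISTENER_NAME] [HOST]
listener_log_path() {
  local version=$1
  local name
  # Assumption: directory and file names use the lowercase listener name.
  name=$(printf '%s' "${2:-LISTENER}" | tr '[:upper:]' '[:lower:]')
  if [ "$version" -ge 11 ]; then
    # Only look up the host name when the ADR path actually needs it.
    local host=${3:-$(hostname)}
    echo "$ORACLE_BASE/diag/tnslsnr/$host/$name/trace/$name.log"
  else
    echo "$ORACLE_HOME/network/log/$name.log"
  fi
}
```

For example, `listener_log_path 11 LISTENER_CC entel2` gives the trace-directory path for the `listener_cc` home listed in the adrci output below.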

On 11g you can also use the adrci command:

```
oracle@entel2:[/oracle]$adrci

ADRCI: Release 11.2.0.4.0 - Production on Wed Nov 30 20:56:28 2016

Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved.

ADR base = "/oracle"
adrci> help          -- lists the help topics; "help show alert" shows the detailed usage of SHOW ALERT

HELP [topic]
Available Topics:
CREATE REPORT
ECHO
EXIT
HELP
HOST
IPS
PURGE
RUN
SET BASE
SET BROWSER
SET CONTROL
SET ECHO
SET EDITOR
SET HOMES | HOME | HOMEPATH
SET TERMOUT
SHOW ALERT
SHOW BASE
SHOW CONTROL
SHOW HM_RUN
SHOW HOMES | HOME | HOMEPATH
SHOW INCDIR
SHOW INCIDENT
SHOW PROBLEM
SHOW REPORT
SHOW TRACEFILE
SPOOL

There are other commands intended to be used directly by Oracle, type
"HELP EXTENDED" to see the list

adrci> show alert    -- displays alert log entries

Choose the alert log from the following homes to view:

1: diag/clients/user_oracle/host_880756540_80
2: diag/tnslsnr/procsdb2/listener_cc
3: diag/tnslsnr/entel2/sid_list_listener
4: diag/tnslsnr/entel2/listener_rb
5: diag/tnslsnr/entel2/listener
6: diag/tnslsnr/entel2/listener_cc
7: diag/tnslsnr/procsdb1/listener_rb
8: diag/rdbms/ccdg/ccdg
9: diag/rdbms/rb/rb
10: diag/rdbms/cc/cc
Q: to quit

Please select option: 5    -- enter the number of the home whose log you want to view
Output the results to file: /tmp/alert_13187_1397_listener_3.ado
2016-06-27 09:15:45.164000 -04:00
Create Relation ADR_CONTROL
Create Relation ADR_INVALIDATION
Create Relation INC_METER_IMPT_DEF
2016-06-27 09:15:46.444000 -04:00
Create Relation INC_METER_PK_IMPTS
System parameter file is /oracle/product/112/network/admin/listener.ora
Log messages written to /oracle/diag/tnslsnr/entel2/listener/alert/log.xml
Trace information written to /oracle/diag/tnslsnr/entel2/listener/trace/ora_16175_140656975550208.trc
Trace level is currently 0
Started with pid=16175
Listening on: (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=10.45.7.198)(PORT=1521)))
Listening on: (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.123.1)(PORT=1521)))
Listener completed notification to CRS on start
......
```
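The "Listening on:" lines in the output above record every endpoint the listener opened at startup. Below is a sketch that pulls out protocol, host and port from such lines. It assumes the log text has been saved to a file, for example the .ado file adrci wrote to /tmp or a copy of listener.log. The function name `listening_endpoints` is hypothetical. Endpoints without HOST/PORT, such as IPC addresses, are not matched by this pattern.

```shell
#!/bin/bash
# Hypothetical helper: print "protocol host port" for each "Listening on:" line in FILE.
# Lines whose address has no (HOST=...)(PORT=...) pair (e.g. IPC) are skipped.
listening_endpoints() {
  sed -n 's/.*Listening on: .*(PROTOCOL=\([^)]*\)).*(HOST=\([^)]*\)).*(PORT=\([^)]*\)).*/\1 \2 \3/p' "$1"
}
```

Run against the excerpt above, it should list `tcp 10.45.7.198 1521` and `tcp 192.168.123.1 1521`.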

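Listener log cleanup

The listener log file (listener.log) should be cleaned up regularly, because:

1: listener.log keeps growing and takes up extra storage space.

2: an oversized listener.log causes problems and is tedious to search.

3: an oversized listener.log makes writing to and reading it slower and more cumbersome.

Regular cleanup of listener.log is also called truncating the log file.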
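What counts as "too large" is a site decision. Below is a minimal sketch that checks the size before rotating. The function name `log_needs_rotation` is hypothetical, and so is the 100 MB default; neither is an Oracle setting.

```shell
#!/bin/bash
# Hypothetical helper: exit 0 when LOG_FILE is larger than MAX_MB megabytes (default 100).
log_needs_rotation() {
  local log_file=$1 max_mb=${2:-100}
  [ -f "$log_file" ] || return 1
  local bytes
  # $(( )) strips the leading spaces some wc implementations print.
  bytes=$(( $(wc -c < "$log_file") ))
  [ "$bytes" -gt $(( max_mb * 1024 * 1024 )) ]
}
```

Typical use: `if log_needs_rotation listener.log 500; then ...; fi`, with the rotation steps below inside the `if`.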

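A wrong approach

```
oracle@entel2:[/oracle]$mv listener.log listener.log.20161201
oracle@entel2:[/oracle]$cp /dev/null listener.log
oracle@entel2:[/oracle]$more listener.log
```

After truncating the log this way, the listener process (tnslsnr) does not write new entries to the new listener.log. It keeps writing to listener.log.20161201. The reason is that tnslsnr holds the log file open, and mv only renames the file. The open file handle therefore still points at the renamed file.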
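The open-file-handle behaviour can be reproduced without Oracle. In this sketch, file descriptor 3 stands in for the handle tnslsnr keeps on listener.log. It demonstrates only the file-system effect; no listener is involved.

```shell
#!/bin/bash
# Simulate a long-running writer that keeps listener.log open (as tnslsnr does).
demo_dir=$(mktemp -d)
exec 3>>"$demo_dir/listener.log"        # fd 3 plays the listener's open log handle
echo "entry before rename" >&3
mv "$demo_dir/listener.log" "$demo_dir/listener.log.20161201"
cp /dev/null "$demo_dir/listener.log"   # a brand-new, empty file with a different inode
echo "entry after rename" >&3           # still written to the renamed file
exec 3>&-                               # close the descriptor
wc -c < "$demo_dir/listener.log"        # the new listener.log is still empty
cat "$demo_dir/listener.log.20161201"   # holds both entries
```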

The correct approach

1: First, stop the listener process (tnslsnr) from logging.

```
oracle@entel2:[/oracle]$lsnrctl set log_status off
```

2: Copy listener.log to a file named listener.log.yyyymmdd.

```
oracle@entel2:[/oracle]$cp listener.log listener.log.20161201
```

3: Empty listener.log. There are several ways to do this:

```
oracle@entel2:[/oracle]$echo "" > listener.log
```

or

```
oracle@entel2:[/oracle]$cp /dev/null listener.log
```

or

```
oracle@entel2:[/oracle]$cat /dev/null > listener.log
```

or

```
oracle@entel2:[/oracle]$>listener.log
```

(echo "" leaves a single newline in the file; the other three leave it at zero bytes.)

4: Turn logging back on for the listener process (tnslsnr).

```
oracle@entel2:[/oracle]$lsnrctl set log_status on
```

You can also move listener.log away instead. The listener creates a new listener.log when logging is switched back on.

```
oracle@entel2:[/oracle]$lsnrctl set log_status off
oracle@entel2:[/oracle]$mv listener.log listener.log.yyyymmdd
oracle@entel2:[/oracle]$lsnrctl set log_status on
```
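The four steps can be wrapped in one function. This is a minimal sketch, not a tested production tool. `rotate_listener_log` and the `LSNRCTL` override variable are hypothetical names. It drives lsnrctl through standard input with `set current_listener`, the same way the cls_oracle.sh script does, so that non-default listeners can be handled too. It also departs from the cp in step 2: it appends to the dated copy, so a second run on the same day does not overwrite the first copy.

```shell
#!/bin/bash
# Hypothetical helper: rotate_listener_log LOG_FILE [LISTENER_NAME]
# Set LSNRCTL to a full path if lsnrctl is not on PATH.
rotate_listener_log() {
  local log_file=$1
  local listener=${2:-LISTENER}
  local lsnrctl=${LSNRCTL:-lsnrctl}
  local copy
  copy="$log_file.$(date +%Y%m%d)"

  if [ ! -f "$log_file" ]; then
    echo "No such log file: $log_file" >&2
    return 1
  fi

  # Step 1: stop the listener from writing to the log.
  if ! "$lsnrctl" <<EOF >/dev/null
set current_listener $listener
set log_status off
EOF
  then
    echo "Could not switch logging off for $listener" >&2
    return 1
  fi

  # Steps 2 and 3: append to the dated copy, then empty the original in place.
  cat "$log_file" >> "$copy" && : > "$log_file"
  local rc=$?

  # Step 4: switch logging back on, even if the copy failed.
  "$lsnrctl" <<EOF >/dev/null
set current_listener $listener
set log_status on
EOF
  return $rc
}
```

Example: `rotate_listener_log /oracle/diag/tnslsnr/entel2/listener/trace/listener.log LISTENER`, run as the oracle user.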

Cleanup shell script

In practice these steps belong in a shell script. Schedule it with crontab so the listener log is cleaned up and truncated regularly.

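A simple version (core part only):

```shell
# Run as root. ORACLE_HOME must be set; ORACLE_BACKUP is a site-specific backup directory.
rq=`date +"%d"`    # day of month, 01-31
# Stop logging first so no entries are written between the copy and the truncation.
su - oracle -c "lsnrctl set log_status off"
cp $ORACLE_HOME/network/log/listener.log $ORACLE_BACKUP/network/log/listener_$rq.log
cp /dev/null $ORACLE_HOME/network/log/listener.log
su - oracle -c "lsnrctl set log_status on"
```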
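The simple script has no retention step. It names copies by day of month, so a file such as listener_31.log is only refreshed in months that have a 31st. A `find -mtime` step bounds how long any copy survives. The sketch below assumes that naming and a flat backup directory. `purge_listener_copies` is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: delete listener_DD.log copies in BACKUP_DIR older than DAYS days.
purge_listener_copies() {
  local backup_dir=$1 days=$2
  find "$backup_dir" -maxdepth 1 -type f -name 'listener_[0-9][0-9].log' \
       -mtime +"$days" -exec rm -f {} +
}
```

Example: `purge_listener_copies "$ORACLE_BACKUP/network/log" 31` as the last line of the simple script.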

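This script still leaves one problem open: how long to keep the truncated listener logs. For example, I may want to keep them for only one month and have the job enforce that automatically, without manual work. The script cls_oracle.sh below does exactly that. It also rotates and cleans other log files, such as the alert log (alert_SID.log).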

```shell
#!/bin/bash
#
# Script used to cleanup any Oracle environment.
#
# Cleans:      audit_dump_dest
#              background_dump_dest
#              core_dump_dest
#              user_dump_dest
#
# Rotates:     Alert Logs
#              Listener Logs
#
# Scheduling:  00 00 * * * /home/oracle/_cron/cls_oracle/cls_oracle.sh -d 31 > /home/oracle/_cron/cls_oracle/cls_oracle.log 2>&1
#
# Created By:  Tommy Wang 2012-09-10
#
# History:
#

RM="rm -f"
RMDIR="rm -rf"
LS="ls -l"
MV="mv"
TOUCH="touch"
TESTTOUCH="echo touch"
TESTMV="echo mv"
TESTRM=$LS
TESTRMDIR=$LS
SUCCESS=0
FAILURE=1
TEST=0
HOSTNAME=`hostname`
ORAENV="oraenv"
TODAY=`date +%Y%m%d`
ORIGPATH=/usr/local/bin:$PATH
ORIGLD=$LD_LIBRARY_PATH
export PATH=$ORIGPATH

# Usage function.
f_usage(){
  echo "Usage: `basename $0` -d DAYS [-a DAYS] [-b DAYS] [-c DAYS] [-n DAYS] [-r DAYS] [-u DAYS] [-t] [-h]"
  echo "  -d = Mandatory default number of days to keep log files that are not explicitly passed as parameters."
  echo "  -a = Optional number of days to keep audit logs."
  echo "  -b = Optional number of days to keep background dumps."
  echo "  -c = Optional number of days to keep core dumps."
  echo "  -n = Optional number of days to keep network log files."
  echo "  -r = Optional number of days to keep clusterware log files."
  echo "  -u = Optional number of days to keep user dumps."
  echo "  -h = Optional help mode."
  echo "  -t = Optional test mode. Does not delete any files."
}

if [ $# -lt 1 ]; then
  f_usage
  exit $FAILURE
fi

# Function used to check the validity of days (must be a positive integer).
f_checkdays(){
  case "$1" in
    ''|*[!0-9]*)
      echo "ERROR: Number of days is invalid."
      exit $FAILURE
      ;;
  esac
  if [ "$1" -lt 1 ]; then
    echo "ERROR: Number of days is invalid."
    exit $FAILURE
  fi
}

# Function used to cut log files.
f_cutlog(){
  # Set name of log file.
  LOG_FILE=$1
  CUT_FILE=${LOG_FILE}.${TODAY}
  # Cut the log file if it has not been cut today.
  if [ -f "$CUT_FILE" ]; then
    echo "Log Already Cut Today: $CUT_FILE"
  elif [ ! -f "$LOG_FILE" ]; then
    echo "Log File Does Not Exist: $LOG_FILE"
  elif [ ! -s "$LOG_FILE" ]; then
    echo "Log File Has Zero Size: $LOG_FILE"
  else
    # Cut file: rename it to LOG.YYYYMMDD and recreate an empty LOG.
    echo "Cutting Log File: $LOG_FILE"
    $MV "$LOG_FILE" "$CUT_FILE"
    $TOUCH "$LOG_FILE"
  fi
}

# Function used to delete log files.
f_deletelog(){
  # Set name of log file.
  CLEAN_LOG=$1
  # Set time limit and confirm it is valid.
  CLEAN_DAYS=$2
  f_checkdays $CLEAN_DAYS
  # Delete cut copies (LOG.YYYYMMDD) older than CLEAN_DAYS days.
  find $CLEAN_LOG.[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9] -type f -mtime +$CLEAN_DAYS -exec $RM {} \; 2>/dev/null
}

# Function used to get database parameter values.
f_getparameter(){
  if [ -z "$1" ]; then
    return
  fi
  PARAMETER=$1
  # The value is prefixed with "a=" so it can be picked out of the SQL*Plus output.
  sqlplus -s /nolog <<EOF | awk '/^a=/ {sub(/^a=/, ""); print}'
set head off pagesize 0 feedback off linesize 200
whenever sqlerror exit 1
conn / as sysdba
select 'a='||value from v\$parameter where name = '$PARAMETER';
EOF
}

# Function to get a unique list of directories (first occurrence wins).
f_getuniq(){
  if [ -z "$1" ]; then
    return
  fi
  local UNIQ="" e u MATCH
  for e in $1; do
    MATCH=N
    for u in $UNIQ; do
      if [ "$e" = "$u" ]; then
        MATCH=Y
        break
      fi
    done
    if [ "$MATCH" = "N" ]; then
      UNIQ="$UNIQ $e"
    fi
  done
  echo $UNIQ
}

# Parse the command line options.
while getopts a:b:c:d:n:r:u:th OPT; do
  case $OPT in
    a) ADAYS=$OPTARG ;;
    b) BDAYS=$OPTARG ;;
    c) CDAYS=$OPTARG ;;
    d) DDAYS=$OPTARG ;;
    n) NDAYS=$OPTARG ;;
    r) RDAYS=$OPTARG ;;
    u) UDAYS=$OPTARG ;;
    t) TEST=1 ;;
    h) f_usage
       exit 0 ;;
    *) f_usage
       exit 2 ;;
  esac
done
shift $(($OPTIND - 1))

# Ensure the default number of days is passed.
if [ -z "$DDAYS" ]; then
  echo "ERROR: The default days parameter is mandatory."
  f_usage
  exit $FAILURE
fi
f_checkdays $DDAYS

echo "`basename $0` Started `date`."

# Use test mode if specified.
if [ $TEST -eq 1 ]; then
  RM=$TESTRM
  RMDIR=$TESTRMDIR
  MV=$TESTMV
  TOUCH=$TESTTOUCH
  echo "Running in TEST mode."
fi

# Set the number of days to the default if not explicitly set.
ADAYS=${ADAYS:-$DDAYS}; echo "Keeping audit logs for $ADAYS days."; f_checkdays $ADAYS
BDAYS=${BDAYS:-$DDAYS}; echo "Keeping background logs for $BDAYS days."; f_checkdays $BDAYS
CDAYS=${CDAYS:-$DDAYS}; echo "Keeping core dumps for $CDAYS days."; f_checkdays $CDAYS
NDAYS=${NDAYS:-$DDAYS}; echo "Keeping network logs for $NDAYS days."; f_checkdays $NDAYS
RDAYS=${RDAYS:-$DDAYS}; echo "Keeping clusterware logs for $RDAYS days."; f_checkdays $RDAYS
UDAYS=${UDAYS:-$DDAYS}; echo "Keeping user logs for $UDAYS days."; f_checkdays $UDAYS

# Check for the oratab file.
if [ -f /var/opt/oracle/oratab ]; then
  ORATAB=/var/opt/oracle/oratab
elif [ -f /etc/oratab ]; then
  ORATAB=/etc/oratab
else
  echo "ERROR: Could not find oratab file."
  exit $FAILURE
fi

# Build list of distinct Oracle Home directories.
OH=`grep -Ei ":Y|:N" $ORATAB | grep -v "^#" | grep -v "\*" | cut -d":" -f2 | sort | uniq`

# Exit if there are no Oracle Home directories.
if [ -z "$OH" ]; then
  echo "No Oracle Home directories to clean."
  exit $SUCCESS
fi

# Get the list of running databases (PMON processes are named e.g. ora_pmon_<SID>).
SIDS=`ps -e -o args | grep '^[a-z]*_pmon_' | sed 's/^[a-z]*_pmon_//' | sort`

# Gather information for each running database.
for ORACLE_SID in $SIDS; do
  # Set the Oracle environment.
  ORAENV_ASK=NO
  export ORACLE_SID
  . $ORAENV
  if [ $? -ne 0 ]; then
    echo "Could not set Oracle environment for $ORACLE_SID."
  else
    export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$ORIGLD
    ORAENV_ASK=YES
    echo "ORACLE_SID: $ORACLE_SID"

    # Get the audit_dump_dest.
    ADUMPDEST=`f_getparameter audit_dump_dest`
    if [ -n "$ADUMPDEST" ] && [ -d "$ADUMPDEST" ]; then
      echo "  Audit Dump Dest: $ADUMPDEST"
      ADUMPDIRS="$ADUMPDIRS $ADUMPDEST"
    fi

    # Get the background_dump_dest.
    BDUMPDEST=`f_getparameter background_dump_dest`
    echo "  Background Dump Dest: $BDUMPDEST"
    if [ -n "$BDUMPDEST" ] && [ -d "$BDUMPDEST" ]; then
      BDUMPDIRS="$BDUMPDIRS $BDUMPDEST"
    fi

    # Get the core_dump_dest.
    CDUMPDEST=`f_getparameter core_dump_dest`
    echo "  Core Dump Dest: $CDUMPDEST"
    if [ -n "$CDUMPDEST" ] && [ -d "$CDUMPDEST" ]; then
      CDUMPDIRS="$CDUMPDIRS $CDUMPDEST"
    fi

    # Get the user_dump_dest.
    UDUMPDEST=`f_getparameter user_dump_dest`
    echo "  User Dump Dest: $UDUMPDEST"
    if [ -n "$UDUMPDEST" ] && [ -d "$UDUMPDEST" ]; then
      UDUMPDIRS="$UDUMPDIRS $UDUMPDEST"
    fi
  fi
done

# Do cleanup for each Oracle Home.
for ORAHOME in `f_getuniq "$OH"`; do
  # Get the standard audit directory if present.
  if [ -d $ORAHOME/rdbms/audit ]; then
    ADUMPDIRS="$ADUMPDIRS $ORAHOME/rdbms/audit"
  fi
  # Get the Cluster Ready Services Daemon (crsd) log directory if present.
  if [ -d $ORAHOME/log/$HOSTNAME/crsd ]; then
    CRSLOGDIRS="$CRSLOGDIRS $ORAHOME/log/$HOSTNAME/crsd"
  fi
  # Get the Oracle Cluster Registry (OCR) log directory if present.
  if [ -d $ORAHOME/log/$HOSTNAME/client ]; then
    OCRLOGDIRS="$OCRLOGDIRS $ORAHOME/log/$HOSTNAME/client"
  fi
  # Get the Cluster Synchronization Services (CSS) log directory if present.
  if [ -d $ORAHOME/log/$HOSTNAME/cssd ]; then
    CSSLOGDIRS="$CSSLOGDIRS $ORAHOME/log/$HOSTNAME/cssd"
  fi
  # Get the Event Manager (EVM) log directory if present.
  if [ -d $ORAHOME/log/$HOSTNAME/evmd ]; then
    EVMLOGDIRS="$EVMLOGDIRS $ORAHOME/log/$HOSTNAME/evmd"
  fi
  # Get the RACG log directory if present.
  if [ -d $ORAHOME/log/$HOSTNAME/racg ]; then
    RACGLOGDIRS="$RACGLOGDIRS $ORAHOME/log/$HOSTNAME/racg"
  fi
done

# Clean the audit_dump_dest directories.
if [ -n "$ADUMPDIRS" ]; then
  for DIR in `f_getuniq "$ADUMPDIRS"`; do
    if [ -d $DIR ]; then
      echo "Cleaning Audit Dump Directory: $DIR"
      find $DIR -type f -name "*.aud" -mtime +$ADAYS -exec $RM {} \; 2>/dev/null
    fi
  done
fi

# Clean the background_dump_dest directories.
if [ -n "$BDUMPDIRS" ]; then
  for DIR in `f_getuniq "$BDUMPDIRS"`; do
    if [ -d $DIR ]; then
      echo "Cleaning Background Dump Destination Directory: $DIR"
      # Clean up old trace (.trc) and trace map (.trm) files and old cdmp directories.
      find $DIR -type f -name "*.tr[cm]" -mtime +$BDAYS -exec $RM {} \; 2>/dev/null
      find $DIR -type d -name "cdmp*" -mtime +$BDAYS -exec $RMDIR {} \; 2>/dev/null
      # Cut the alert log and clean old ones.
      for f in `find $DIR -type f -name "alert\_*.log" ! -name "alert_[0-9A-Z]*.[0-9]*.log" 2>/dev/null`; do
        echo "Alert Log: $f"
        f_cutlog $f
        f_deletelog $f $BDAYS
      done
    fi
  done
fi

# Clean the core_dump_dest directories.
if [ -n "$CDUMPDIRS" ]; then
  for DIR in `f_getuniq "$CDUMPDIRS"`; do
    if [ -d $DIR ]; then
      echo "Cleaning Core Dump Destination: $DIR"
      find $DIR -type d -name "core*" -mtime +$CDAYS -exec $RMDIR {} \; 2>/dev/null
    fi
  done
fi

# Clean the user_dump_dest directories.
if [ -n "$UDUMPDIRS" ]; then
  for DIR in `f_getuniq "$UDUMPDIRS"`; do
    if [ -d $DIR ]; then
      echo "Cleaning User Dump Destination: $DIR"
      find $DIR -type f -name "*.trc" -mtime +$UDAYS -exec $RM {} \; 2>/dev/null
    fi
  done
fi

# Cluster Ready Services Daemon (crsd) Log Files
for DIR in `f_getuniq "$CRSLOGDIRS $OCRLOGDIRS $CSSLOGDIRS $EVMLOGDIRS $RACGLOGDIRS"`; do
  if [ -d $DIR ]; then
    echo "Cleaning Clusterware Directory: $DIR"
    find $DIR -type f -name "*.log" -mtime +$RDAYS -exec $RM {} \; 2>/dev/null
  fi
done

# Clean Listener Log Files.
# Get the list of running listeners. It is assumed that if the listener is not running, the log file does not need to be cut.
ps -e -o args | grep tnslsnr | grep -v grep | while read LSNR; do
  # Reset per-listener values so nothing carries over from the previous listener.
  LSNRDIAG=""
  LSNRLOG=""

  # Derive the lsnrctl path from the tnslsnr process path.
  TNSLSNR=`echo $LSNR | awk '{print $1}'`
  ORACLE_PATH=`dirname $TNSLSNR`
  export ORACLE_HOME=`dirname $ORACLE_PATH`
  export PATH=$ORACLE_PATH:$ORIGPATH
  export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$ORIGLD
  LSNRCTL=$ORACLE_PATH/lsnrctl
  echo "Listener Control Command: $LSNRCTL"

  # Derive the listener name from the running process.
  LSNRNAME=`echo $LSNR | awk '{print $2}' | tr "[:upper:]" "[:lower:]"`
  echo "Listener Name: $LSNRNAME"

  # Get the listener major version (e.g. 11 from "Version 11.2.0.4.0").
  LSNRVER=`$LSNRCTL version | grep "^LSNRCTL.*Version" | head -1 | sed 's/.*Version \([0-9]*\)\..*/\1/'`
  echo "Listener Version: $LSNRVER"

  # Get the TNS_ADMIN variable.
  echo "Initial TNS_ADMIN: $TNS_ADMIN"
  unset TNS_ADMIN
  TNS_ADMIN=`$LSNRCTL status $LSNRNAME | grep "Listener Parameter File" | awk '{print $4}'`
  if [ -n "$TNS_ADMIN" ]; then
    export TNS_ADMIN=`dirname $TNS_ADMIN`
  else
    export TNS_ADMIN=$ORACLE_HOME/network/admin
  fi
  echo "Network Admin Directory: $TNS_ADMIN"

  # If the listener is 11g or later, get the diagnostic (ADR) directories.
  if [ "${LSNRVER:-0}" -ge 11 ]; then
    # Get the listener log (alert) directory; the path is the last field of the output line.
    LSNRDIAG=$($LSNRCTL <<EOF | grep log_directory | awk '{print $NF}'
set current_listener $LSNRNAME
show log_directory
EOF
)
    echo "Listener Diagnostic Directory: $LSNRDIAG"
    # The plain-text log is <listener_name>.log (lowercase) in the trace directory.
    LSNRTRC=$($LSNRCTL <<EOF | grep trc_directory | awk '{print $NF}'
set current_listener $LSNRNAME
show trc_directory
EOF
)
    if [ -n "$LSNRTRC" ]; then
      LSNRLOG=$LSNRTRC/$LSNRNAME.log
    fi
    echo "Listener Log File: $LSNRLOG"
  # If 10g or lower, do not use diagnostic dest.
  else
    # Get the listener log file location.
    LSNRLOG=`$LSNRCTL status $LSNRNAME | grep "Listener Log File" | awk '{print $4}'`
  fi

  # See if the listener is logging.
  if [ -z "$LSNRLOG" ]; then
    echo "Listener Logging is OFF. Not rotating the listener log."
  # See if the listener log exists.
  elif [ ! -r "$LSNRLOG" ]; then
    echo "Listener Log Does Not Exist: $LSNRLOG"
  # See if the listener log has been cut today.
  elif [ -f "$LSNRLOG.$TODAY" ]; then
    echo "Listener Log Already Cut Today: $LSNRLOG.$TODAY"
  # Cut the listener log if none of the previous conditions were met.
  else
    # Remove old 11g+ listener log XML files.
    if [ -n "$LSNRDIAG" ] && [ -d "$LSNRDIAG" ]; then
      echo "Cleaning Listener Diagnostic Dest: $LSNRDIAG"
      find $LSNRDIAG -type f -name "log\_[0-9]*.xml" -mtime +$NDAYS -exec $RM {} \; 2>/dev/null
    fi
    # Disable logging.
    $LSNRCTL <<EOF
set current_listener $LSNRNAME
set log_status off
EOF
    # Cut the listener log file.
    f_cutlog $LSNRLOG
    # Enable logging.
    $LSNRCTL <<EOF
set current_listener $LSNRNAME
set log_status on
EOF
    # Delete old listener logs.
    f_deletelog $LSNRLOG $NDAYS
  fi
done

echo "`basename $0` Finished `date`."
exit $SUCCESS
```

Set up a crontab job that runs the script every night at midnight and keeps log files for 31 days:

```
00 00 * * * /home/oracle/_cron/cls_oracle/cls_oracle.sh -d 31 > /home/oracle/_cron/cls_oracle/cls_oracle.sh.log 2>&1
```
