2016: DianNao Family: Energy-Efficient Hardware Accelerators for Machine Learning


- This was published in Communications of the ACM (https://cacm.acm.org/).

- Reference:

Chen Y, Chen T, Xu Z, et al.

DianNao Family: Energy-Efficient Hardware Accelerators for Machine Learning[J].

Communications of the ACM, 2016, 59(11): 105-112.

- https://cacm.acm.org/magazines/2016/11/209123-diannao-family/fulltext

- Sure enough, I found it.

- And I downloaded it. Not bad, huh?

The original version of this paper is entitled "DianNao: A Small-Footprint, High-Throughput Accelerator for Ubiquitous Machine Learning" and was published in Proceedings of the International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS) 49, 4 (March 2014), ACM, New York, NY, 269–284.

Abstract

- ML tasks are pervasive

- in a broad range of applications

- and in a broad range of systems (from embedded systems to data centers).

- Computer architectures are evolving toward heterogeneous multi-cores,

- a mix of cores and hardware accelerators.

- Designing hardware accelerators for ML

- can achieve both high efficiency and broad application scope.

Second paragraph

- While efficient computational primitives

- are important for a hardware accelerator,

- inefficient memory transfers can

- potentially void the throughput, energy, or cost advantages of accelerators,

- i.e., an Amdahl's law effect;

- memory transfers should therefore become a first-order concern,

- just like in processors,

- rather than an element factored into accelerator design as a second step.

- The article introduces a series of hardware accelerators

- designed for ML (especially neural networks),

- with emphasis on the impact of memory on accelerator design, performance, and energy.

- On a number of representative neural network layers:

- a speedup of 450.65x over a GPU

- and an energy reduction of 150.31x on average

- for a 64-chip DaDianNao system (a member of the DianNao family).

1 INTRODUCTION

- Designing hardware accelerators that realize the best possible tradeoff between flexibility and efficiency is becoming a prominent issue.

- The first question is: for which category of applications should one primarily design accelerators?

- Together with the architecture trend towards accelerators, a second simultaneous and significant trend in high-performance and embedded applications is developing: many of the emerging high-performance and embedded applications, from image/video/audio recognition to automatic translation, business analytics, and robotics, rely on machine learning techniques.

- This trend in application comes together with a third trend in machine learning (ML) where a small number of techniques, based on neural networks (especially deep learning techniques [16, 26]), have been proved in the past few years to be state-of-the-art across a broad range of applications.

- As a result, there is a unique opportunity to design accelerators having significant application scope as well as high performance and efficiency [4].

Second paragraph

- Currently, ML workloads

- are mostly executed on

- multicores using SIMD [44],

- GPUs [7],

- or FPGAs [2].

- The aforementioned trends

- have already been identified

- by researchers who have proposed accelerators implementing

- CNNs [2]

- or Multi-Layer Perceptrons [43];

- accelerators focusing on other domains,

- such as image processing,

- propose efficient implementations of some of the computational primitives used

- by machine-learning techniques, such as convolutions [37].

- There are also ASIC implementations of ML,

- such as Support Vector Machines and CNNs.

- However, these works focused on

- efficiently implementing the computational primitives;

- they either ignore memory transfers for the sake of simplicity [37, 43]

- or plug their computational accelerator to memory via a more or less sophisticated DMA [2, 12, 19].

Third paragraph

- While efficient implementation of computational primitives is a first and important step with promising results, inefficient memory transfers can potentially void the throughput, energy, or cost advantages of accelerators, that is, an Amdahl's law effect, and thus, they should become a first-order concern, just like in processors, rather than an element factored in accelerator design on a second step.

- Unlike in processors though, one can factor in the specific nature of memory transfers in target algorithms, just like it is done for accelerating computations.

- This is especially important in the domain of ML where there is a clear trend towards scaling up the size of learning models in order to achieve better accuracy and more functionality [16, 24].

Fourth paragraph

- In this article, we introduce a series of hardware accelerators designed for ML (especially neural networks), including DianNao, DaDianNao, ShiDianNao, and PuDianNao as listed in Table 1.

- We focus our study on memory usage, and we investigate the accelerator architecture to minimize memory transfers and to perform them as efficiently as possible.

2 DIANNAO: A NN ACCELERATOR

- DianNao is

- the first member of the DianNao accelerator family;

- it accommodates state-of-the-art NN techniques (deep learning)

- and inherits the broad application scope of NNs.

2.1 Architecture

- DianNao consists of

- an input buffer for input neurons (NBin),

- an output buffer for output neurons (NBout),

- and a buffer for synaptic weights (SB),

- connected to a computational block (performing both synapse and neuron computations)

- called the Neural Functional Unit (NFU), plus the control logic (CP); see Figure 1.

NBin stores the input neurons.

SB stores the synaptic weights.

NBout stores the output neurons.

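I think the figure can be read like this: 2 input neurons and 2 synapses, multiply each pair and add them up, and the output is 1 neuron. But this NFU is impressive: it can compute two output neurons at once.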

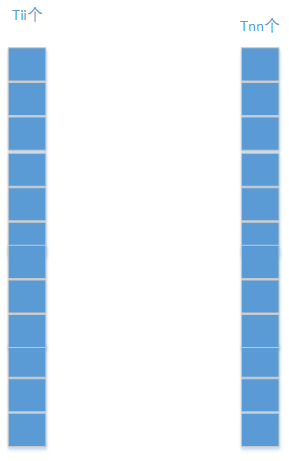

NFU

- a functional block of $T_i$ inputs/synapses

- and $T_n$ output neurons,

- time-shared by different algorithmic blocks of neurons.

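The NFU operates on $T_i$ inputs and synapses to produce $T_n$ output neurons. But shouldn't there be $T_i \times T_n$ synapses?? (There are: the $T_i$ input neurons are shared by all $T_n$ output neurons, but each output neuron has its own $T_i$ synapses, so every step consumes $T_n \times T_i$ synapses.)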
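To make the $T_n \times T_i$ point concrete, here is a minimal C sketch of one NFU step covering stages NFU-1 and NFU-2. It assumes $T_n = T_i = 16$ (the values reported for the original DianNao design) and uses `float` instead of the hardware's 16-bit fixed-point arithmetic; the name `nfu_step` is hypothetical, and the sequential loops only model what the hardware does in parallel.

```c
/* Assumed sizes: Tn = Ti = 16, as reported for the original DianNao. */
#define TN 16  /* output neurons per NFU step */
#define TI 16  /* inputs (and synapses per output) per NFU step */

/* Hypothetical model of one NFU step, NFU-1 and NFU-2 only (no activation).
 * The TI inputs are shared by all TN outputs; each output has its own row of
 * TI synapses, so one step consumes TN x TI synapses. Floats stand in for
 * the 16-bit fixed-point values used by the hardware. */
void nfu_step(const float in[TI], float syn[TN][TI], float partial[TN]) {
    for (int n = 0; n < TN; n++) {         /* in hardware: TN adder trees in parallel */
        float acc = 0.0f;
        for (int i = 0; i < TI; i++)
            acc += syn[n][i] * in[i];      /* TN x TI products (NFU-1), reduced per output (NFU-2) */
        partial[n] += acc;                 /* accumulate into the running partial sum */
    }
}
```

Calling `nfu_step` repeatedly with successive slices of inputs and synapses accumulates full dot products in `partial`, which is what the loop tiling below organizes.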

- Depending on the layer type,

- computations at the NFU can be decomposed into either two or three stages.

- For classifier and convolutional layers:

- multiplication of synapses × inputs (NFU-1),

- addition of all the products (NFU-2),

- sigmoid (NFU-3).

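For a classifier or convolutional layer, it is simply synapses × inputs, summed up, then passed through the sigmoid. This I get: isn't that just a convolution (a weighted sum of inputs)?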

If it is a classifier layer, then the input is
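The three-stage decomposition for a classifier or convolutional output neuron can be sketched as follows. This is an illustration, not the paper's design: `TI = 16`, the function names, and the use of `relu` as the pluggable NFU-3 function are assumptions (the notes only say the last stage may be a sigmoid or another nonlinear function). NFU-2 is written as an explicit adder tree that halves the number of values at each level.

```c
/* Assumed: TI inputs per output neuron, a power of two. */
#define TI 16

typedef float (*activation_fn)(float);

/* NFU-2: adder tree. Reduces TI values pairwise in log2(TI) levels (modifies v). */
static float adder_tree(float v[TI]) {
    for (int width = TI / 2; width >= 1; width /= 2)
        for (int k = 0; k < width; k++)
            v[k] += v[k + width];
    return v[0];
}

/* Example of "another nonlinear function" for NFU-3 (hypothetical choice). */
float relu(float x) { return x > 0.0f ? x : 0.0f; }

/* One output neuron, split into the three NFU stages. */
float neuron_3stage(const float in[TI], const float syn[TI], activation_fn act) {
    float prod[TI];
    for (int i = 0; i < TI; i++)
        prod[i] = syn[i] * in[i];   /* NFU-1: synapses x inputs */
    float s = adder_tree(prod);     /* NFU-2: sum of all products */
    return act(s);                  /* NFU-3: sigmoid or another nonlinear function */
}
```

Passing the activation as a function pointer mirrors the remark that the last stage can vary while NFU-1 and NFU-2 stay the same.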

- The last stage (sigmoid or another nonlinear function) can vary.

- For pooling layers, there is no multiplication (no synapses),

- and pooling can be average or max.

- The adders have multiple inputs;

- they are in fact adder trees.

- The second stage also contains

- shifters and max operators for pooling.

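Why would shifters be needed?? (Presumably for average pooling: in fixed point, dividing the window sum by a power-of-two window size is just a right shift.)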
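A minimal sketch of pooling on NFU-2 alone, under the assumption above that the shifters serve average pooling over power-of-two windows. Values are modeled as 16-bit fixed-point (`int16_t`) integers; the function names are hypothetical, and a non-power-of-two window (e.g. 3x3) would need more than a plain shift.

```c
#include <stdint.h>

/* Pooling uses NFU-2 only: no synapses, so the NFU-1 multipliers are bypassed. */

/* Average pooling over 2^log2_k values: adder tree, then a right shift
 * replaces the division by the window size. Positive inputs assumed
 * (right-shifting negative signed values is implementation-defined in C). */
int16_t avg_pool(const int16_t *v, int log2_k) {
    int32_t sum = 0;                    /* wider accumulator avoids overflow */
    for (int i = 0; i < (1 << log2_k); i++)
        sum += v[i];
    return (int16_t)(sum >> log2_k);    /* divide by 2^log2_k */
}

/* Max pooling: max operators take the place of the adders. */
int16_t max_pool(const int16_t *v, int k) {
    int16_t m = v[0];
    for (int i = 1; i < k; i++)
        if (v[i] > m) m = v[i];
    return m;
}
```

For a 2x2 window, `avg_pool(v, 2)` sums four values and shifts right by 2.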

- The sigmoid function (for classifier and convolutional layers) can be efficiently implemented using piecewise linear interpolation, $f(x) = a_i \times x + b_i$ for $x \in [x_i, x_{i+1}]$; 16 segments are sufficient.
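The piecewise-linear sigmoid can be sketched in C as below. Assumptions: 16 uniform unit-width segments over $[-8, 8]$ (the notes only say 16 segments suffice, not where the breakpoints go), endpoint values taken from the true sigmoid rounded to 7 digits, and saturation outside that range. Names such as `pwl_sigmoid` are hypothetical; in hardware the $(a_i, b_i)$ table would be precomputed, while here `pwl_sigmoid_init` builds it from the endpoint values.

```c
#define NSEG 16
#define X_MIN (-8.0f)
#define X_MAX (8.0f)

/* sigmoid(k) for k = 0..8, precomputed offline (rounded); sigmoid(-k) = 1 - sigmoid(k). */
static const float SIG_POS[9] = {
    0.5000000f, 0.7310586f, 0.8807971f, 0.9525741f, 0.9820138f,
    0.9933071f, 0.9975274f, 0.9990889f, 0.9996646f
};

static float seg_a[NSEG], seg_b[NSEG];  /* slope a_i and intercept b_i per segment */

/* Build f(x) = a_i * x + b_i on [x_i, x_{i+1}] with x_i = -8 + i. */
void pwl_sigmoid_init(void) {
    for (int i = 0; i < NSEG; i++) {
        int k0 = i - 8, k1 = i - 7;            /* segment endpoints x_i, x_{i+1} */
        float y0 = k0 >= 0 ? SIG_POS[k0] : 1.0f - SIG_POS[-k0];
        float y1 = k1 >= 0 ? SIG_POS[k1] : 1.0f - SIG_POS[-k1];
        seg_a[i] = y1 - y0;                    /* slope over a unit-width segment */
        seg_b[i] = y0 - seg_a[i] * (float)k0;  /* line passes through (x_i, y0) */
    }
}

float pwl_sigmoid(float x) {
    if (x <= X_MIN) return 1.0f - SIG_POS[8];  /* saturate below the table */
    if (x >= X_MAX) return SIG_POS[8];         /* saturate above the table */
    int i = (int)(x - X_MIN);                  /* segment index, 0..15 */
    if (i > NSEG - 1) i = NSEG - 1;
    return seg_a[i] * x + seg_b[i];
}
```

With these uniform segments the error is on the order of $10^{-2}$ (about 0.012 at $x = 1.5$); placing breakpoints non-uniformly would be one way to tighten it.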

On-chip Storage

- on-chip storage structures of DianNao

- can be construed as modified buffers of scratchpads.

- While a cache is an excellent storage structure for a general-purpose processor, it is a sub-optimal way to exploit reuse because of the cache access overhead (tag check, associativity, line size, speculative read, etc.) and cache conflicts.

- The efficient alternative, scratchpad, is used in VLIW processors but it is known to be very difficult to compile for.

- However, a scratchpad in a dedicated accelerator realizes the best of both worlds: efficient storage, and both efficient and easy exploitation of locality, because only a few algorithms have to be manually adapted.

Second paragraph

- The on-chip storage is split into three structures (NBin, NBout, and SB) because there are three types of data (input neurons, output neurons, and synapses) with different characteristics (read width and reuse distance).

- The first benefit of splitting the storage structures is to tailor each SRAM to the appropriate read/write width.

- The second benefit is to avoid conflicts, as would occur in a cache.

- Moreover, we implement three DMAs to exploit spatial locality of data, one for each buffer (two load DMAs for inputs, one store DMA for outputs).
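The read-width argument can be made concrete with a little arithmetic. Assuming $T_n = T_i = 16$ and 16-bit data (as in the original DianNao design), the bits each buffer must supply per NFU step differ by an order of magnitude. The helper names are hypothetical, and NBout is modeled as holding plain 16-bit values, although partial sums may be kept at higher precision.

```c
/* Assumed DianNao parameters: Tn = Ti = 16, 16-bit fixed-point data. */
enum { TN = 16, TI = 16, DATA_BITS = 16 };

/* Bits each buffer must deliver (or accept) per NFU step. */
int nbin_width_bits(void)  { return TI * DATA_BITS; }       /* TI input neurons */
int sb_width_bits(void)    { return TN * TI * DATA_BITS; }  /* one synapse per (output, input) pair */
int nbout_width_bits(void) { return TN * DATA_BITS; }       /* TN outputs / partial sums */
```

SB must deliver 4096 bits per step versus 256 for NBin and NBout, so a single shared structure would be poorly matched to all three access patterns.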

2.2 Loop tiling

- DianNao uses loop tiling to reduce memory accesses,

- so it can accommodate large neural networks.

- Example:

- a classifier layer

- with $N_n$ output neurons,

- fully connected to $N_i$ inputs.

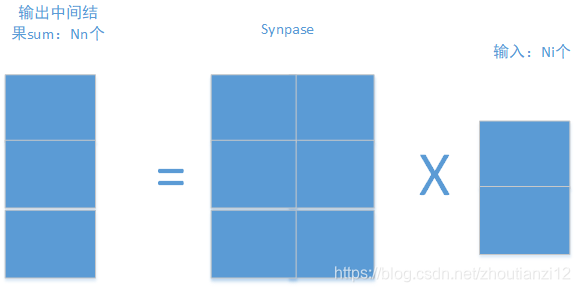

- As the figure shows, for a classifier layer:

With $N_n$ outputs and $N_i$ inputs, the synapses form an $N_n \times N_i$ matrix; multiplying this matrix by the $N_i$-element input vector gives the result.

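- First, take out one block (tile).

- Something puzzles me, though:

- what if the first output on the right depends on every element on the left?

- How do you compute it then?

- Actually, when computing the first output on the right,

- I only need one row of the synapse matrix!

- So what about that huge synapse matrix?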

- Below are the original code and

- the tiled code,

- which maps the classifier layer onto DianNao.

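```c
// Original classifier layer: Nn output neurons, each fully connected to Ni inputs
for (int n = 0; n < Nn; n++)
    sum[n] = 0;
for (int n = 0; n < Nn; n++)          // output neurons
    for (int i = 0; i < Ni; i++)      // input neurons
        sum[n] += synapse[n][i] * neuron[i];
for (int n = 0; n < Nn; n++)
    neuron[n] = sigmoid(sum[n]);      // safe here: all sums are done before any input is overwritten
```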

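- My idea:

- take Tnn outputs at a time

- together with Tii inputs;

- that is still too big for the hardware,

- so split again:

- take Tn outputs and Ti inputs at a time.

- That's it.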
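A rough count shows why tiling reduces memory traffic. This is a simplified model, not the paper's analysis: it assumes the $N_i$ inputs do not fit in NBin, that NBin holds exactly one $T_{ii}$ tile, and that nothing else is reused on chip. Counts are numbers of values read from memory; the function names are hypothetical.

```c
/* Untiled loop nest with Ni too large for NBin: every output neuron
 * streams the whole input vector again. */
long input_reads_untiled(long Nn, long Ni) {
    return Nn * Ni;
}

/* Tiled: each Tii tile of inputs is loaded once per block of Tnn outputs
 * and reused by all of them (Nn assumed divisible by Tnn). */
long input_reads_tiled(long Nn, long Ni, long Tnn) {
    return (Nn / Tnn) * Ni;
}

/* In a classifier layer every synapse is used exactly once, tiled or not. */
long synapse_reads(long Nn, long Ni) {
    return Nn * Ni;
}
```

With $N_n = N_i = 1024$ and $T_{nn} = 64$, input reads drop from 1,048,576 to 16,384 in this model, while synapse reads stay at 1,048,576: tiling cannot reduce traffic for data that is never reused.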

```c
// Tiled version (as transcribed from the paper's figure), with my notes
for (int nnn = 0; nnn < Nn; nnn += Tnn) {                // tiling for output neurons: this tile will emit Tnn outputs
    for (int iii = 0; iii < Ni; iii += Tii) {            // tiling for input neurons: bring in Tii inputs
        for (int nn = nnn; nn < nnn + Tnn; nn += Tn) {   // Tnn is still too big: split into blocks of Tn starting at nn
            for (int n = nn; n < nn + Tn; n++)
                sum[n] = 0;                              // step 1: zero the partial sums (runs once per input tile iii!)
            // sum[n] = row n of synapse times the inputs
            for (int ii = iii; ii < iii + Tii; ii += Ti) // split the Tii tile into blocks of Ti
                for (int n = nn; n < nn + Tn; n++)
                    for (int i = ii; i < ii + Ti; i++)
                        sum[n] += synapse[n][i] * neuron[i];
            for (int n = nn; n < nn + Tn; n++)
                neuron[n] = sigmoid(sum[n]);             // also overwrites inputs that later blocks still read
        }
    }
}
```

- In the tiled code, the loops over `ii` and `nn`

- reflect that the NFU has $T_i$ inputs/synapses

- and $T_n$ output neurons.

- The input neurons are reused by every output neuron,

- but the input vector is way too big

- to fit into NBin,

- so loop `ii` is also tiled, with factor $T_{ii}$.

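The code above is definitely broken: `sum[n]` is reset to zero for every input tile `iii`, so only the last tile's contribution is accumulated and the sigmoid is applied to that partial sum. The corrected version: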

```c
// Corrected: each Tn-block of outputs is reset once and accumulated over ALL input tiles
// (assumes Nn % Tnn == 0, Tnn % Tn == 0, Ni % Tii == 0, Tii % Ti == 0)
for (int nnn = 0; nnn < Nn; nnn += Tnn) {
    for (int nn = nnn; nn < nnn + Tnn; nn += Tn) {
        for (int n = nn; n < nn + Tn; n++)
            sum[n] = 0;                                  // reset once per output block
        for (int iii = 0; iii < Ni; iii += Tii) {
            for (int ii = iii; ii < iii + Tii; ii += Ti)
                for (int n = nn; n < nn + Tn; n++)
                    for (int i = ii; i < ii + Ti; i++)
                        sum[n] += synapse[n][i] * neuron[i];
        }
        for (int n = nn; n < nn + Tn; n++)
            out[n] = sigmoid(sum[n]);  // out[] is a separate (hypothetical) output array: later blocks still read neuron[]
    }
}
```
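The corrected version above moves the `iii` loop inside the `nn` loop, so each $T_{ii}$ input tile is reloaded for every $T_n$ block. A different fix, sketched below as an assumption rather than the paper's code, keeps the paper's loop order (so one input tile in NBin is reused by all $T_{nn}$ outputs) and simply hoists the reset of `sum` above the input-tile loop. The sizes are arbitrary and chosen only so the tiles divide evenly; the sigmoid is omitted and would be applied once per output after the `iii` loop.

```c
/* Hypothetical small sizes; assumes NN % TNN == 0, TNN % TN == 0,
 * NI % TII == 0 and TII % TI == 0. */
#define NN 8
#define NI 12
#define TNN 4   /* output tile (partial sums kept in NBout) */
#define TN 2    /* NFU outputs per step */
#define TII 6   /* input tile (fits in NBin) */
#define TI 3    /* NFU inputs per step */

/* Reference: untiled classifier layer (pre-activation sums). */
void classifier_naive(float syn[NN][NI], const float in[NI], float sum[NN]) {
    for (int n = 0; n < NN; n++) {
        sum[n] = 0.0f;
        for (int i = 0; i < NI; i++)
            sum[n] += syn[n][i] * in[i];
    }
}

/* Paper loop order (iii outside nn), with the reset hoisted above the input-tile loop. */
void classifier_tiled(float syn[NN][NI], const float in[NI], float sum[NN]) {
    for (int nnn = 0; nnn < NN; nnn += TNN) {
        for (int n = nnn; n < nnn + TNN; n++)
            sum[n] = 0.0f;                                /* reset once per output tile */
        for (int iii = 0; iii < NI; iii += TII)           /* one input tile in NBin... */
            for (int nn = nnn; nn < nnn + TNN; nn += TN)  /* ...reused by all TNN outputs */
                for (int ii = iii; ii < iii + TII; ii += TI)
                    for (int n = nn; n < nn + TN; n++)
                        for (int i = ii; i < ii + TI; i++)
                            sum[n] += syn[n][i] * in[i];
        /* the sigmoid for outputs nnn..nnn+TNN-1 would go here */
    }
}
```

For any fixed `n`, both functions add the products in ascending `i` order, so the two results match exactly.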
